At Hotseat, we’ve taken on the very challenging task of answering legal questions based on reading hundreds of pages of regulations. The terrain is full of subtleties in language and meaning, requires non-trivial reasoning, and filled with situations where mistakes can be very costly.

While we have strikingly promising results, I want to spell out our core belief: AI lawyers won’t replace human lawyers anytime soon, but AI will hand these knowledge-intensive workers real superpowers. Think of ‘bicycle for the mind’ but applied to a new frontier (text understanding at a vast scale), rather than almighty AI overlords stealing jobs left and right. Humans will be in the driving seat, supervising AI.

The legal domain is fraught with challenges, so here I want to talk about Hotseat’s anatomy - product design choices that take a raw and nascent technology of Large Language Models and turn it into a functional tool.

Read before you answer

When using a product like ChatGPT, all answers you get from AI are based on its internal, lossy memory that was filled in during the training process. The AI behaves a little like a student who studied a subject months ago. When faced with a detailed question, instead of saying “I don’t know,” it tries to make up something plausibly sounding based on broad recollections of what they previously learned and pass it as an answer. By default, ChatGPT, when pressed hard into a knowledge corner, will confabulate like a pro.

However, not everything is lost: ChatGPT still has a very broad understanding of the world but is missing the details. We can take this raw ability and ground it in specific knowledge. We tell it to read before it answers. Grounding is the critical technique that shifts AI attention from internal, lossy memory to hard facts and vastly improves the quality of its answers.

(for technical readers: yes, I’m describing Retrieval Augmented Generation, but God is in the detail)

Legal trace

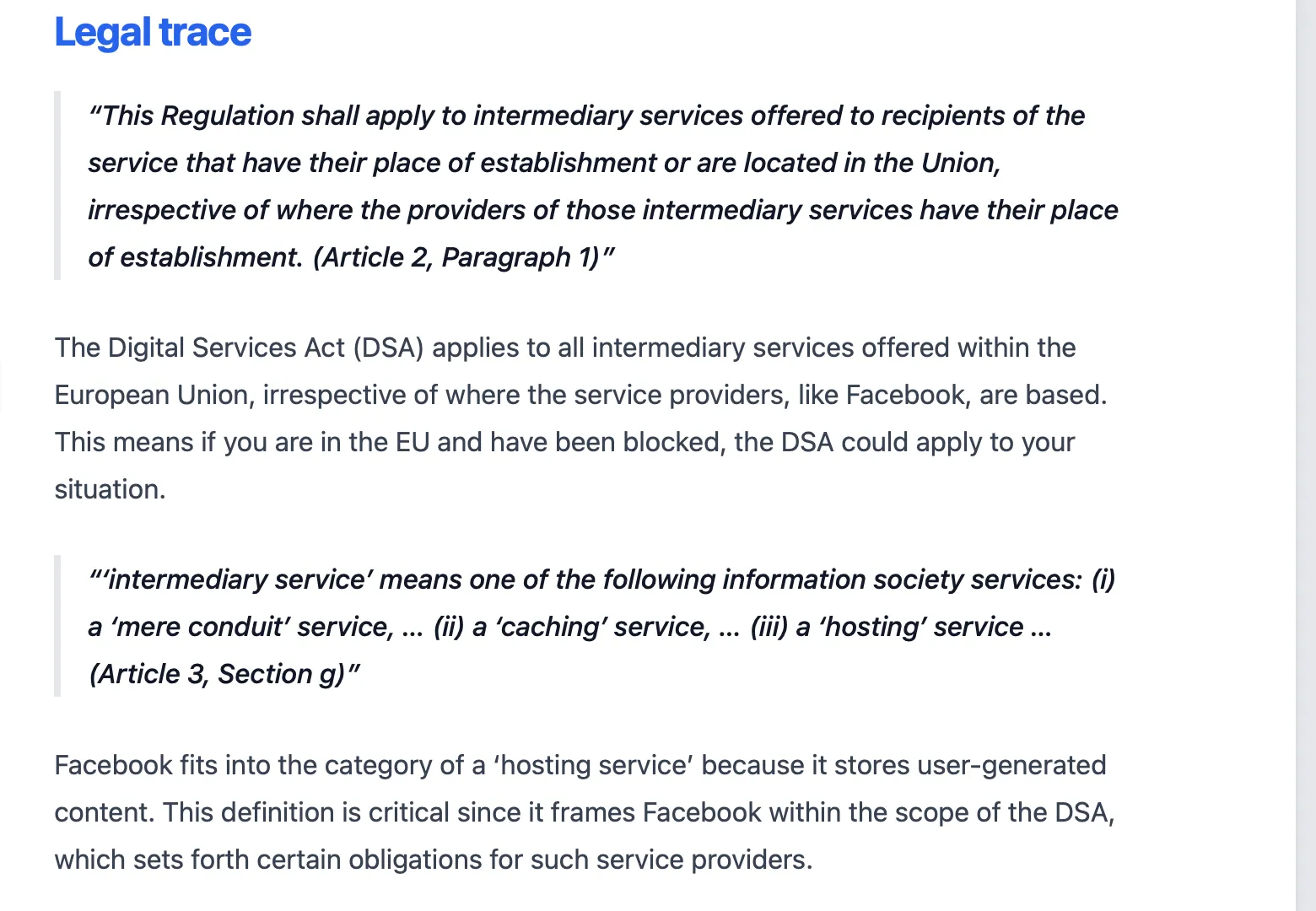

Another choice we’ve made is to force AI to give answers in a particular format of interleaved quotes from a legal text and commentary that explains how this quote ties to the original user question. We call this form of an AI answer a legal trace.

Mistakes are dire, and with the legal trace, we want to make it cheaper for humans to catch them. As a side-effect of forcing this answer format, we also dramatically reduced the number of reasoning mistakes. It’s grounding once again but with an external-facing proof. Instead of trusting the AI that it has read the sources, we say, “Show us the receipts.”

An example of “the receipts”.

Question refinement

When you ask a legal question, even as a specialist, you’re faced with a gap between what you know about your situation and what needs to be laid out in question to answer it well. Suppose we’re talking about answering a question about a single regulation (e.g., the Digital Services Act). In that case, you need to contrast the user question with the regulation’s text to see what terms it defines and what areas it covers to figure out what might be missing.

Hotseat’s AI performs this type of contrastive analysis and forms a series of follow-up (helper) questions to gather all necessary details. Yes, AI is asking humans questions. I wrote a separate blog post about this feature where you can see what it looks like in practice.

One common question I’ve encountered is: where do those follow-up questions come from? The simple answer is that AI prepared them. But did we, the creators of Hotseat, specify how these questions look like in detail, and is all this a parlor trick?

No, what you see in question refinement is a collision of the general understanding of the world (lots of common sense), reading the entire text of a regulation, and reading the user’s question. The AI uses its common sense reasoning to interpret the user’s intent, contrast it against the precise text of the regulation, and create clarifying questions consistent with the user’s guessed intent.

Point queries

One behavior people notice is that the question refinement process inevitably leads to “point queries” - these are very detailed questions about a particular case/situation to solve by analyzing the regulation. Hotseat prefers questions that are specific to your business or product, detailed, rather than broad. For example, a good question will look like this:

I operate a website that lets users book appointments with doctors and review them later. Reviews are public. What kind of product choices or constraints related to user reviews do I need to consider that are influenced by the Digital Services Act?

This is in contrast to more general inquiry such as “What are the content moderation rules according to the Digital Services Act?” The former type of question is much more likely to be answered unambiguously and in a way that enables the user to easily verify the answer.

It’s worth remembering that AI is infinitely patient. You can ask numerous point queries, which is akin to having a legion of lawyers on retainer, but at a very attractive hourly rate.

System 2 thinking

Hotseat uses extensive behind-the-curtain deliberation and processing to arrive at the answer. In more technical terms, we employ various techniques that shift Large Language Models’ (AI’s) “thought” process from System 1 to System 2, borrowing Daniel Kahneman’s classification as an analogy.

A Pile of PDFs

Common feedback I receive is that Hotseat’s exclusive focus on the text of a single regulation at a time holds little practical value. Legal questions often need to be analyzed from multiple angles and by consulting multiple regulations or regulatory regimes. And even if you have the luxury of focusing on a single regulation such as EU’s GDPR you still need to take into account “a pile of PDFs”: official guidelines, court rulings, fact sheets, executive decisions, etc.

My focus on a single regulation to determine whether the reasoning capabilities of AI are up to the task. If AI fails to reason about a single regulation, there’s little reason to expand the scope to a larger problem. However, counterintuitively, if single regulation reasoning meets the bar of legal analysis, scaling it to a mountain of PDFs is a low-risk endeavour.

(To readers outside Europe: here I’m assuming civil law tradition, where regulations are the primary source of law.)

Update: this has been added to Hotseat, a blog post us coming soon.

High-caliber answers

Combined, these design choices have made us real strides towards creating a product experience up to the challenge of analyzing hundreds of pages of dense legal text and answering sophisticated questions in minutes instead of hours or even days. If you want to learn more, check out our website or contact me at greg@hotseatai.com with any questions.